Force field reconstruction

To reconstruct a force field with sgdml, you need a dataset file, which can be generated by importing from various file formats (data preparation). If you just want to play around, feel free to continue with one of our ready-to-go benchmark datasets.

Note

Model training is an expensive task that is best executed on a powerful computer. Consider our online training service if you do not have access to sufficient compute resources.

Automatic (via the CLI)

The easiest way to create a force field (which we sometimes simply refer to as a model) is via the automated assistant in the CLI:

$ sgdml all ethanol.npz <n_train> <n_validate> [<n_test>]

Once the program finishes, it will output a fully trained and cross-validated model file. The parameters <n_train>, <n_validate> and optionally <n_test> specify the sample sizes for the training, validation and test datasets, respectively. All of them are taken from the provided bulk dataset (here: ethanol.npz), without overlap. If no test dataset size is specified, all points excluding the training and validation subsets are used for testing.
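The subset logic described above can be sketched in a few lines of NumPy. This is only an illustration of drawing non-overlapping subsets with the test set defaulting to the remainder; the function name `split_indices` is hypothetical, and the sketch draws points uniformly at random rather than reproducing how sgdml actually selects them.

```python
import numpy as np

def split_indices(n_total, n_train, n_valid, n_test=None, seed=0):
    """Draw disjoint training, validation and test index sets (illustrative only)."""
    if n_test is None:
        # No test size given: use every point not used for training or validation.
        n_test = n_total - n_train - n_valid
    if n_test < 0 or n_train + n_valid + n_test > n_total:
        raise ValueError("requested subsets exceed the dataset size")

    # A single shuffled permutation guarantees that the slices cannot overlap.
    perm = np.random.default_rng(seed).permutation(n_total)
    train = perm[:n_train]
    valid = perm[n_train:n_train + n_valid]
    test = perm[n_train + n_valid:n_train + n_valid + n_test]
    return train, valid, test

train, valid, test = split_indices(5000, 200, 1000)
print(len(train), len(valid), len(test))
```

With a bulk dataset of 5000 points, 200 training points and 1000 validation points, the remaining 3800 points end up in the test subset.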

Tip

If the reconstruction process is terminated prematurely, the command above can simply be reissued (in the same directory) to resume.

Manual (via the CLI or Python API)

In the example above, we have used the CLI command all to automatically run through all steps necessary to reconstruct a force field. However, each of those steps (as described here) can also be triggered individually.

Python API

The same fine-grained functionality is also exposed via the Python API, which is particularly useful when developing new models based on this existing sGDML implementation.

Here is how to train one individual model (without cross-validation or testing as with the assistant used above) for a particular choice of hyper-parameters sig = 20 and lam = 1e-10:

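import sys

import numpy as np
from sgdml.train import GDMLTrain

# Load the bulk dataset from which the training and validation points are drawn.
dataset = np.load('d_ethanol.npz')
n_train = 200

gdml_train = GDMLTrain()

# Describe the training task: subset sizes and the fixed hyper-parameters.
task = gdml_train.create_task(
    dataset, n_train,
    valid_dataset=dataset, n_valid=1000,
    sig=20, lam=1e-10,
)

try:
    model = gdml_train.train(task)
except Exception as err:
    # Abort with the error message if training fails.
    sys.exit(err)
else:
    # Store the trained model for later use.
    np.savez_compressed('m_ethanol.npz', **model)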

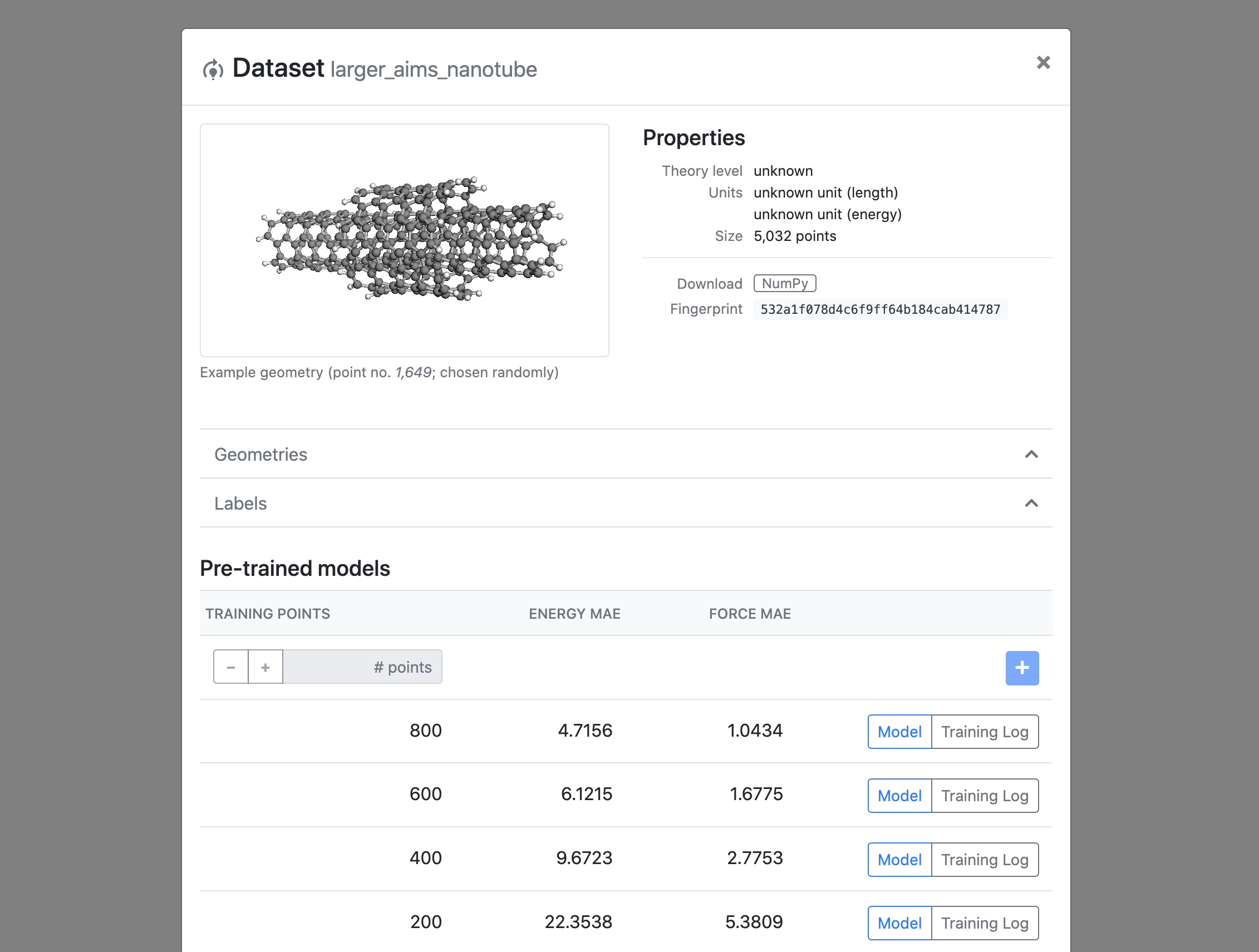

Online (via www.sgdml.org)

We also offer an experimental model training service for anyone without sufficient compute resources. Simply upload your dataset to our website, schedule some training jobs and return later to collect your model files, which can be queried offline.

Frequently asked questions

How to choose suitable training, validation and test subset sizes?

The size of the training set <n_train> is the most important parameter: a larger value will yield a more accurate model, but at the cost of increased training time and memory demand. Increasing the size of the validation and test datasets carries no such penalty. In fact, it is desirable to use large test datasets to get a reliable estimate of the generalization performance of the trained model.
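To get a rough feel for how memory demand grows with <n_train>, here is a back-of-the-envelope sketch. It assumes that the dominant cost is a dense, square kernel matrix in double precision with one row per Cartesian force component of every training geometry. That is an assumption made for illustration; actual memory use depends on the solver and implementation. The helper name `kernel_matrix_gib` is hypothetical.

```python
def kernel_matrix_gib(n_train, n_atoms, bytes_per_entry=8):
    """Estimate the size (in GiB) of a dense kernel matrix.

    Assumption: the matrix has 3 * n_atoms * n_train rows and columns,
    i.e. one per force component of each training geometry.
    """
    dim = 3 * n_atoms * n_train
    return dim * dim * bytes_per_entry / 1024**3

# Ethanol has 9 atoms; compare a few training set sizes.
for n_train in (100, 200, 1000):
    print(n_train, f"{kernel_matrix_gib(n_train, n_atoms=9):.2f} GiB")
```

Under this assumption the estimate grows quadratically: doubling the training set size quadruples the memory needed for the matrix.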

Tip

Start from a small training set size of around a hundred geometries to get a feel for the reconstruction performance as well as the relevant hyper-parameter ranges and then work your way up.

How are the training, validation and test subsets sampled from the provided bulk dataset?

Our sampling scheme aims to mirror the energy distribution of the original dataset in each of the respective subsets (e.g. the Boltzmann distribution in the case of MD trajectories). This is particularly important for very small samples, where a random selection will likely not cover the whole energy range of the original dataset.

Datasets with missing energy labels are the exception: in that case, the subsets are chosen without any such guidance.

Note

Please note that over-represented regions in your original dataset will thus also be over-represented in the training dataset. Consequently, the corresponding model will perform particularly well in those areas, which might or might not be desired. For example, a model reconstructed from an MD trajectory will be very well suited for MD simulations (which heavily sample around minima) but might not be optimal for surface exploration tasks that focus on transition states.
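The idea of mirroring the energy distribution can be illustrated with a simple histogram-based sketch: bin the energies, then draw from each bin in proportion to its population. This is a simplified stand-in and not necessarily the scheme implemented in sgdml; the function name `sample_by_energy` and the choice of 20 bins are assumptions made for this example.

```python
import numpy as np

def sample_by_energy(energies, n_samples, n_bins=20, seed=0):
    """Pick indices so that the sample roughly follows the energy histogram."""
    energies = np.asarray(energies, dtype=float)
    if n_samples > energies.size:
        raise ValueError("cannot sample more points than the dataset contains")
    rng = np.random.default_rng(seed)

    # Assign every point to one of n_bins equal-width energy bins.
    edges = np.histogram_bin_edges(energies, bins=n_bins)
    bin_ids = np.digitize(energies, edges[1:-1])
    counts = np.bincount(bin_ids, minlength=n_bins)

    # Quota per bin, proportional to how many points fall into it.
    exact = counts / counts.sum() * n_samples
    quotas = np.floor(exact).astype(int)
    # Hand out the leftover samples to the bins with the largest remainders.
    leftover = n_samples - quotas.sum()
    for b in np.argsort(-(exact - quotas))[:leftover]:
        quotas[b] += 1

    # Draw the quota from each bin without replacement.
    chosen = [
        rng.permutation(np.flatnonzero(bin_ids == b))[:quotas[b]]
        for b in range(n_bins)
    ]
    return np.concatenate(chosen)

# Synthetic energies stand in for the energy labels of a real dataset.
energies = np.random.default_rng(1).normal(size=2000)
idx = sample_by_energy(energies, 100)
print(len(idx), len(np.unique(idx)))
```

Because each bin contributes in proportion to its population, densely sampled energy regions stay dense in the subset, which is exactly the over-representation effect described in the note above.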